AI Pangaea: Unifying Intelligence Islands for Adapting Myriad Tasks

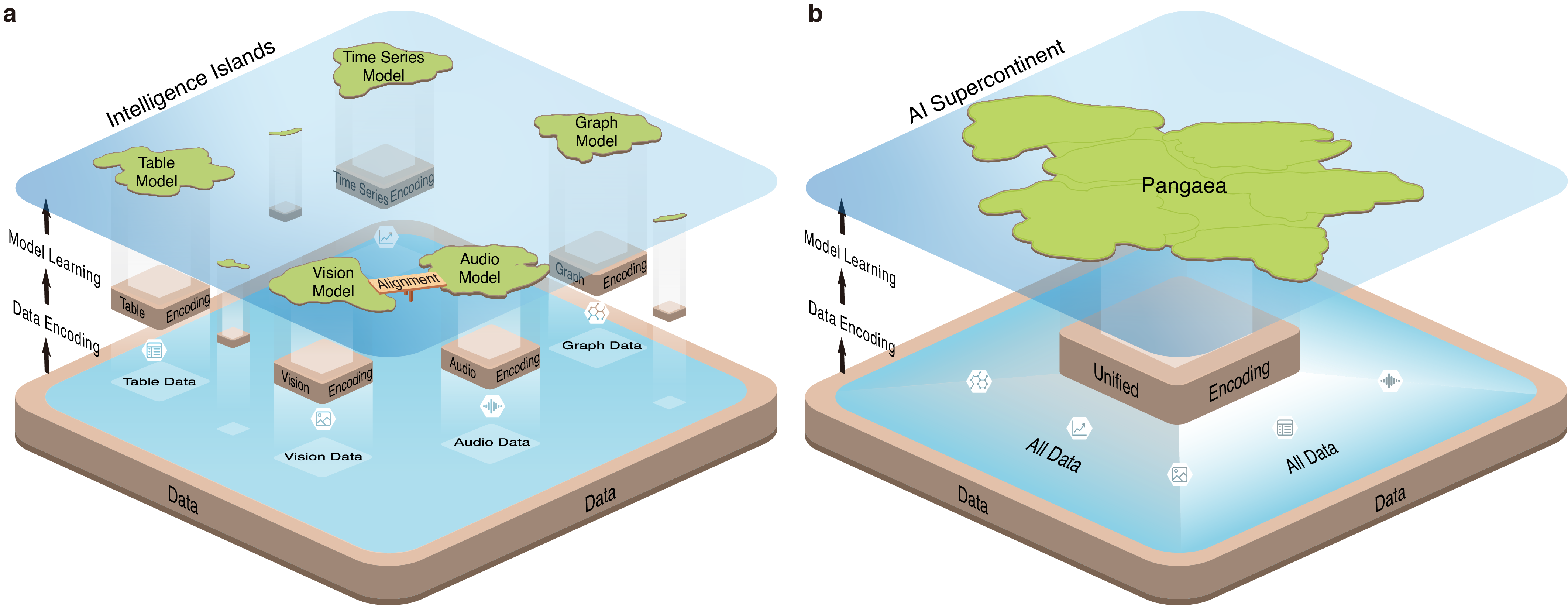

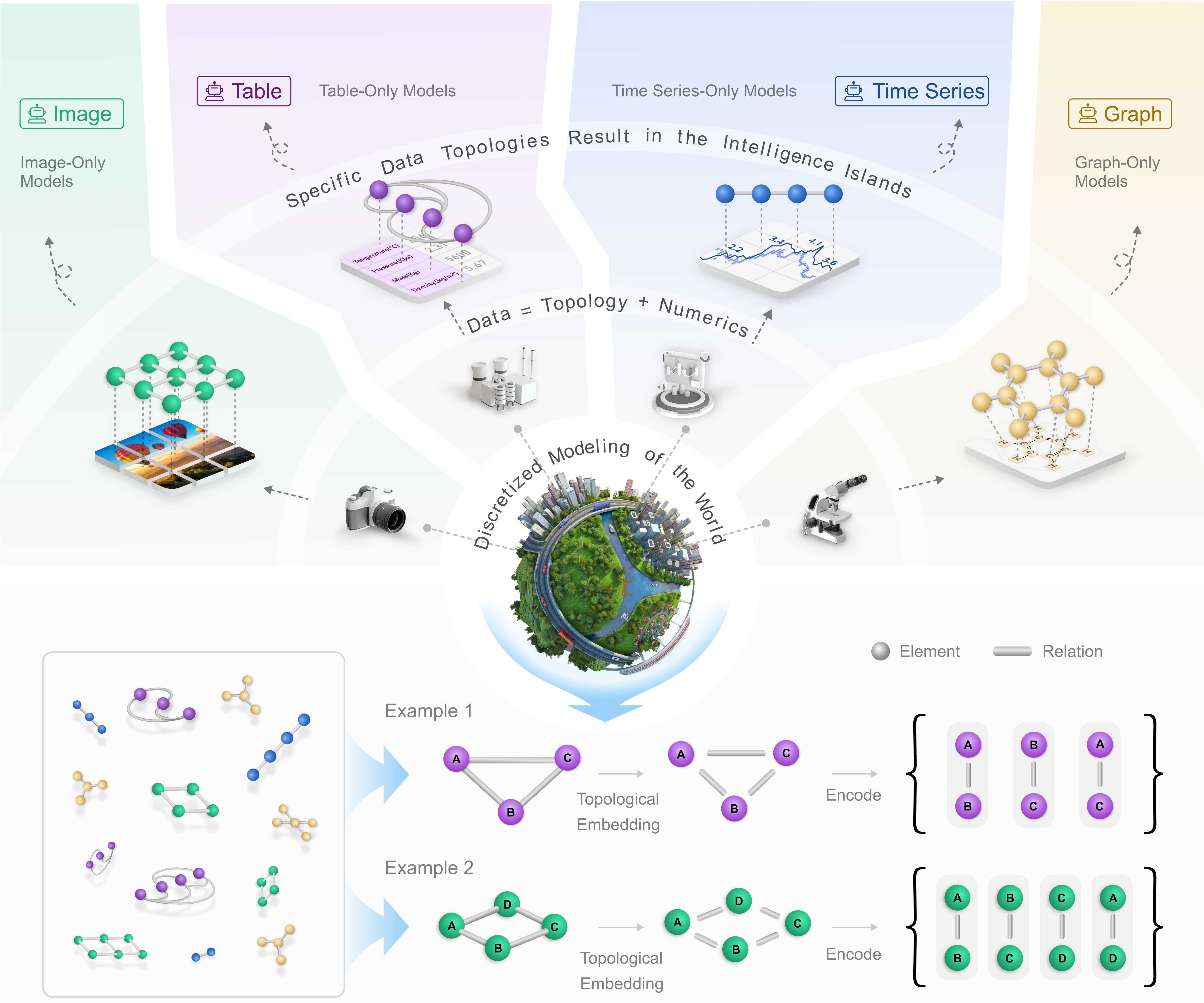

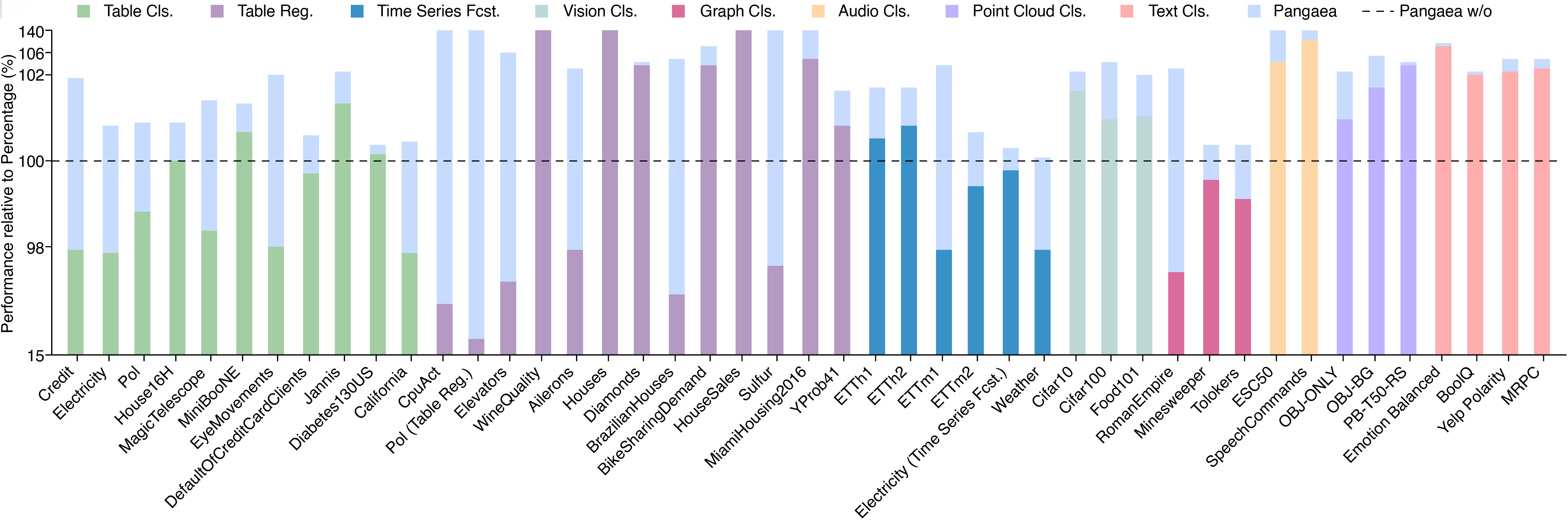

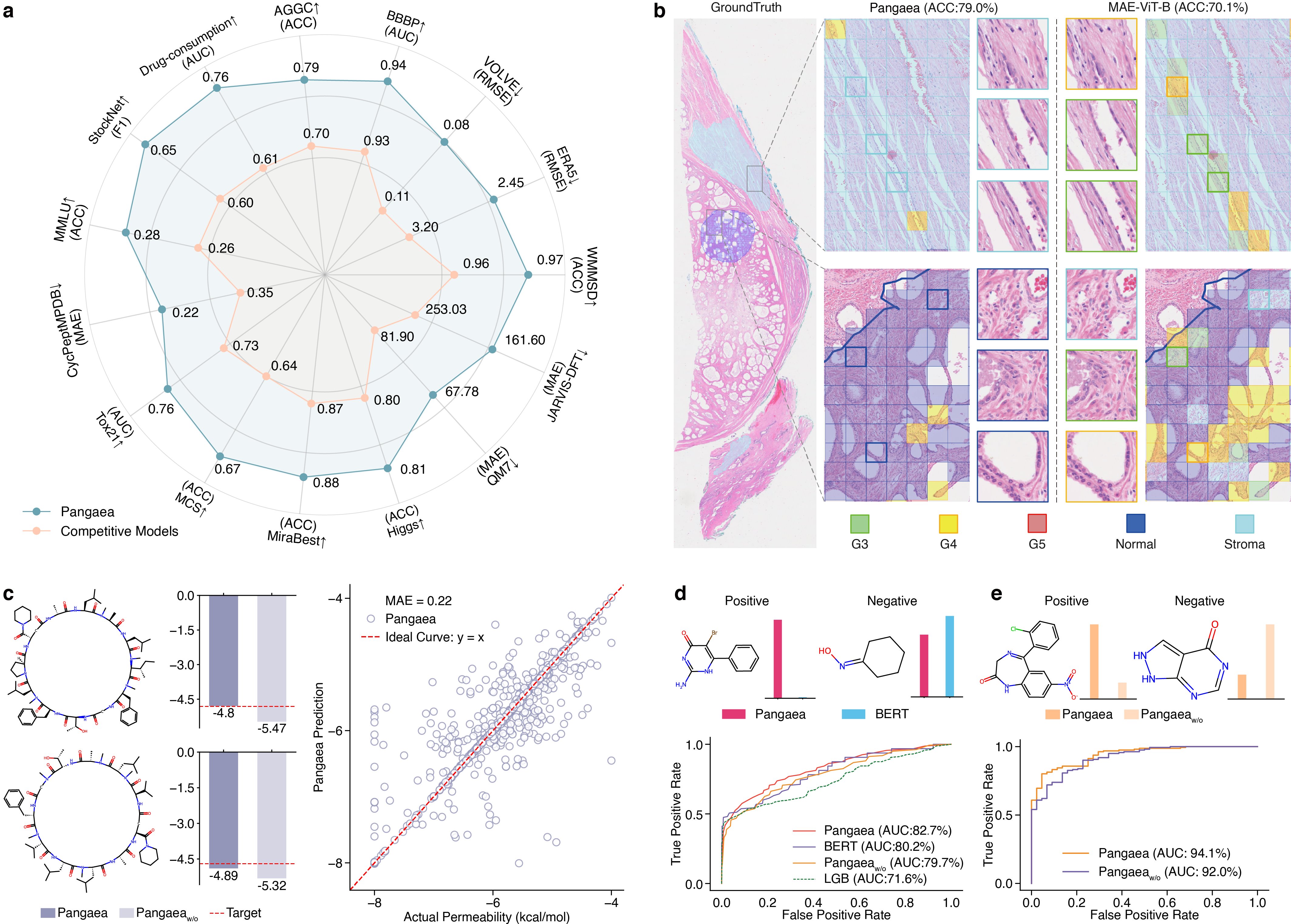

The pursuit of artificial general intelligence (AGI) demands that a single model generalize across myriad tasks, including ones it has never seen. However, current AI models remain isolated from one another because each is limited to specific tasks; we define these isolated models, for the first time, as Intelligence Islands. To unify Intelligence Islands into one, we propose Pangaea, the first AI supercontinent, named after the geological supercontinent Pangaea. Pangaea encodes any data into a unified format and accumulates universal knowledge through pre-training on 296 datasets spanning diverse modalities. As a result, it demonstrates remarkable generalization across 45 general tasks and 15 scientific tasks covering a wide range of scientific subjects. A deeper investigation of Pangaea reveals a scaling effect of modality: the accumulation of universal knowledge across modalities follows the cumulative distribution function of a geometric distribution. Overall, Pangaea shows strong potential for handling myriad tasks and points to a new direction toward AGI.
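The geometric-distribution claim describes a saturating curve. For a geometric distribution with per-trial success probability p, the CDF is F(k) = 1 - (1 - p)^k, so the k-th added modality contributes an extra p(1 - p)^(k - 1), a gain that shrinks as k grows. The sketch below only illustrates that shape. It assumes that k counts modalities and that p is a single free parameter; the paper's fitted form, constants, and values are not reproduced here, and the names `geometric_cdf`, `marginal_gain`, and the value `p = 0.3` are hypothetical.

```python
# Illustrative sketch only: the CDF of a geometric distribution used as a
# saturating curve of "knowledge accumulated" versus number of modalities k.
# The parameter p is hypothetical, not a value reported for Pangaea.


def geometric_cdf(k: int, p: float) -> float:
    """P(X <= k) for a geometric distribution on {1, 2, ...} with success prob p."""
    if not 0.0 < p <= 1.0:
        raise ValueError("p must be in (0, 1]")
    if k < 0:
        raise ValueError("k must be non-negative")
    return 1.0 - (1.0 - p) ** k


def marginal_gain(k: int, p: float) -> float:
    """Extra accumulation from the k-th modality, equal to p * (1 - p) ** (k - 1)."""
    if k < 1:
        raise ValueError("k must be at least 1")
    return geometric_cdf(k, p) - geometric_cdf(k - 1, p)


if __name__ == "__main__":
    p = 0.3  # hypothetical per-modality parameter, chosen only for illustration
    for k in range(1, 6):
        print(f"k={k}: cumulative={geometric_cdf(k, p):.3f}, gain={marginal_gain(k, p):.3f}")
```

Under this assumed form, every added modality still helps, but each contributes a constant fraction (1 - p) of the previous modality's gain, so the curve approaches 1 rather than growing without bound.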

✨ Highlights

🗂️ Unified Data Encoding

A unified triplet-based representation that maps diverse modalities into a shared space.
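To make the idea of a unified triplet format concrete, here is a toy illustration of turning data of different shapes into one list of records with identical structure. The triplet fields used here, (modality, position, value), are our assumption for illustration and are not Pangaea's actual definition; the names `Triplet`, `encode_sequence`, and `encode_grid` are likewise hypothetical. Learning the shared embedding space on top of such records is out of scope for this sketch.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass(frozen=True)
class Triplet:
    """Hypothetical unified record: (modality, position, value)."""
    modality: str
    position: Tuple[int, ...]
    value: float


def encode_sequence(values: Sequence[float], modality: str) -> List[Triplet]:
    # 1-D data (e.g., a time series): position is the index along the sequence.
    return [Triplet(modality, (i,), float(v)) for i, v in enumerate(values)]


def encode_grid(grid: Sequence[Sequence[float]], modality: str) -> List[Triplet]:
    # 2-D data (e.g., a grayscale image or a numeric table): position is (row, column).
    return [
        Triplet(modality, (r, c), float(v))
        for r, row in enumerate(grid)
        for c, v in enumerate(row)
    ]


if __name__ == "__main__":
    # Two different modalities end up in one homogeneous list of triplets.
    mixed = encode_sequence([0.1, 0.5, 0.9], "timeseries") + encode_grid([[1, 2], [3, 4]], "image")
    for t in mixed:
        print(t)
```

The design choice being illustrated is that once every input is a set of same-typed records, a single model can consume them without modality-specific input layers.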

🔄 Cross-Modal Learning

Unified learning across multiple modalities, accumulating universal knowledge.

🔗 Knowledge Transfer

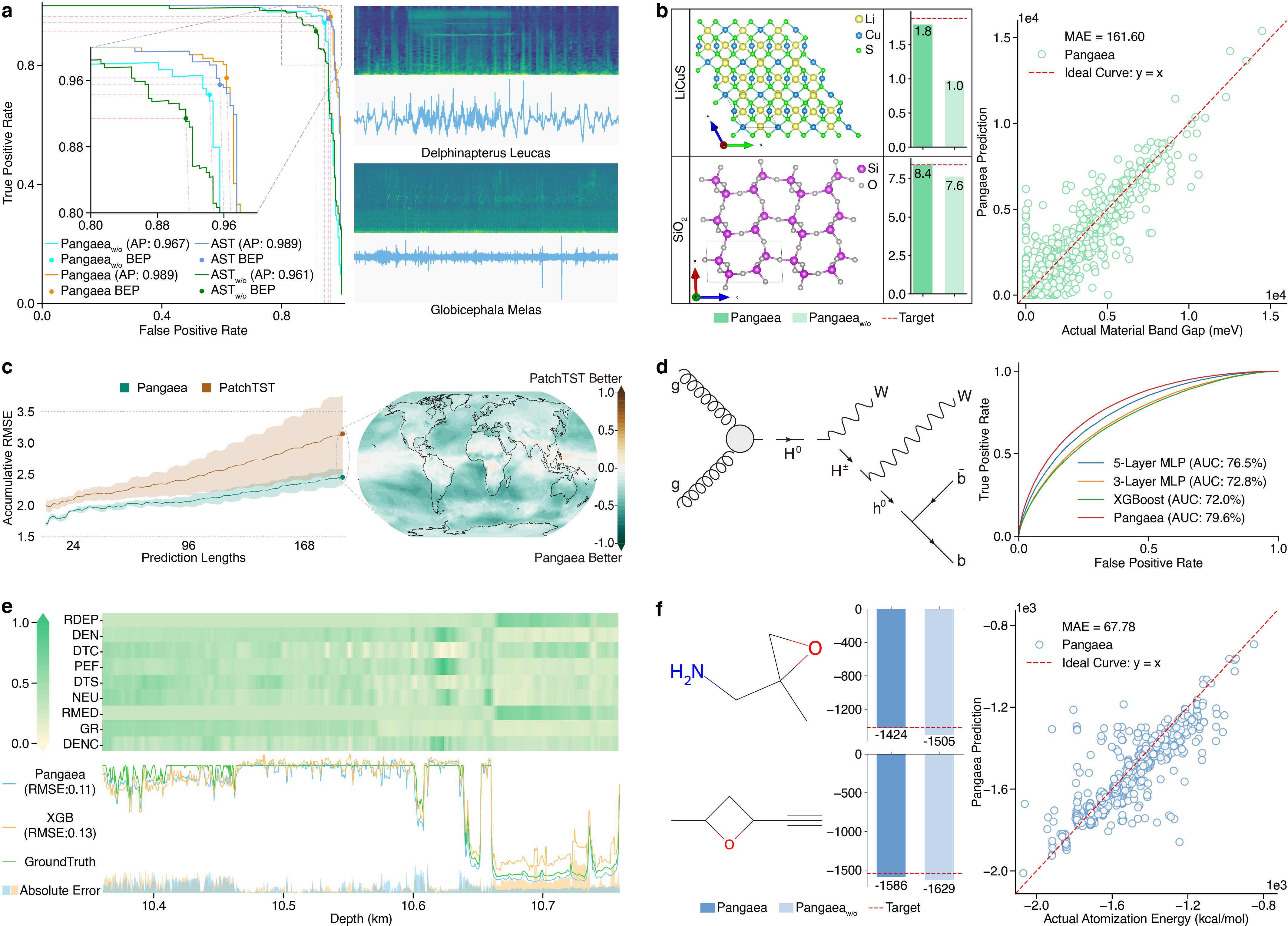

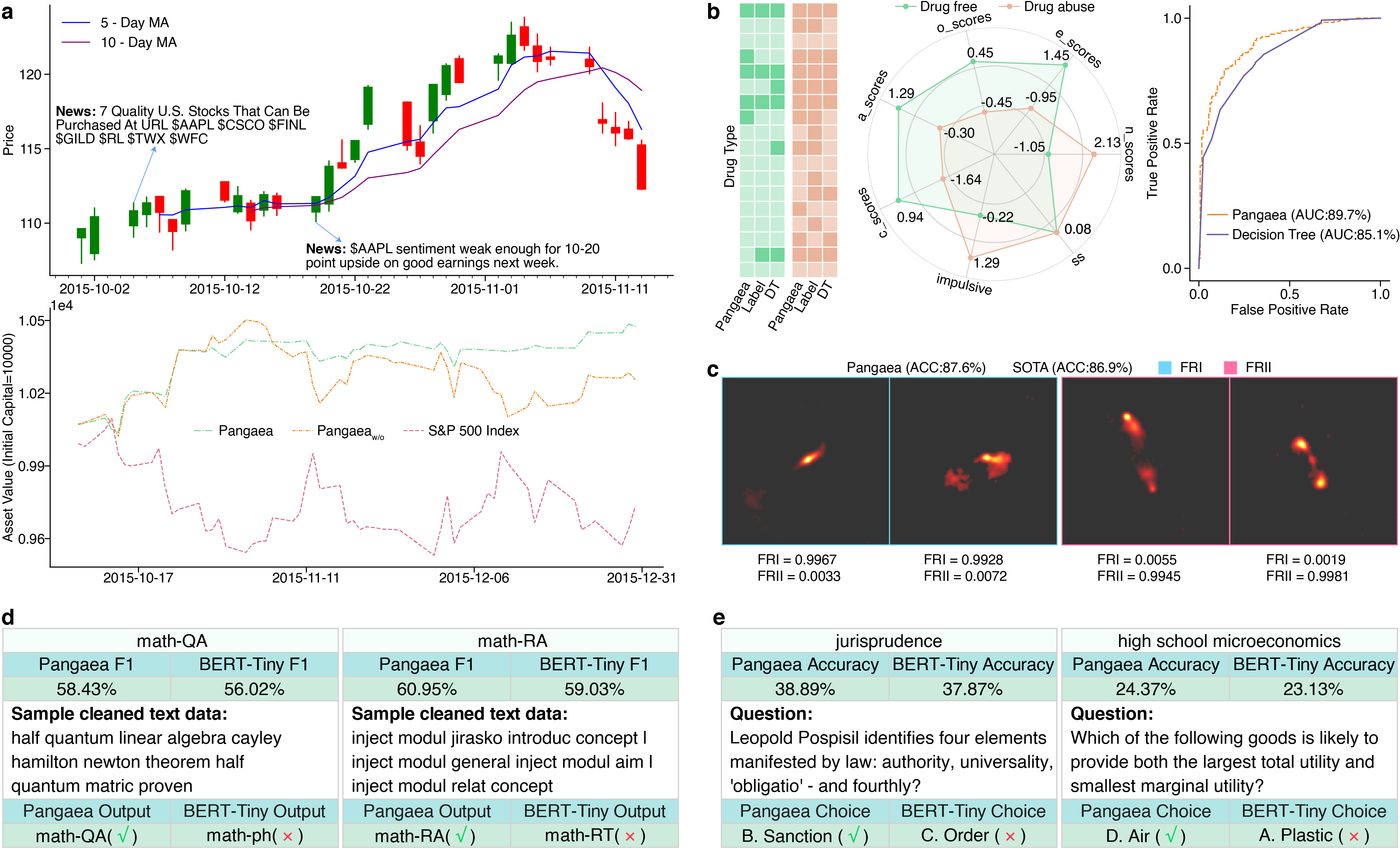

The universal knowledge learned from pre-training benefits downstream tasks.

🧪 Scientific Assessment

Empirical validation on 15 scientific tasks spanning diverse scientific subjects.

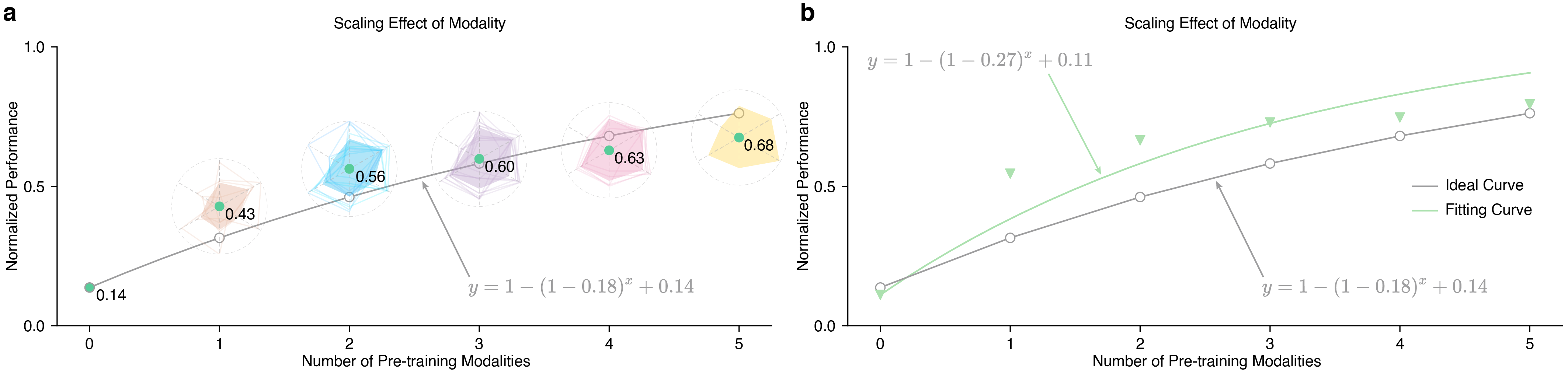

📈 Scaling Effect

Reveals new scaling behaviors as pre-training expands across multiple modalities.

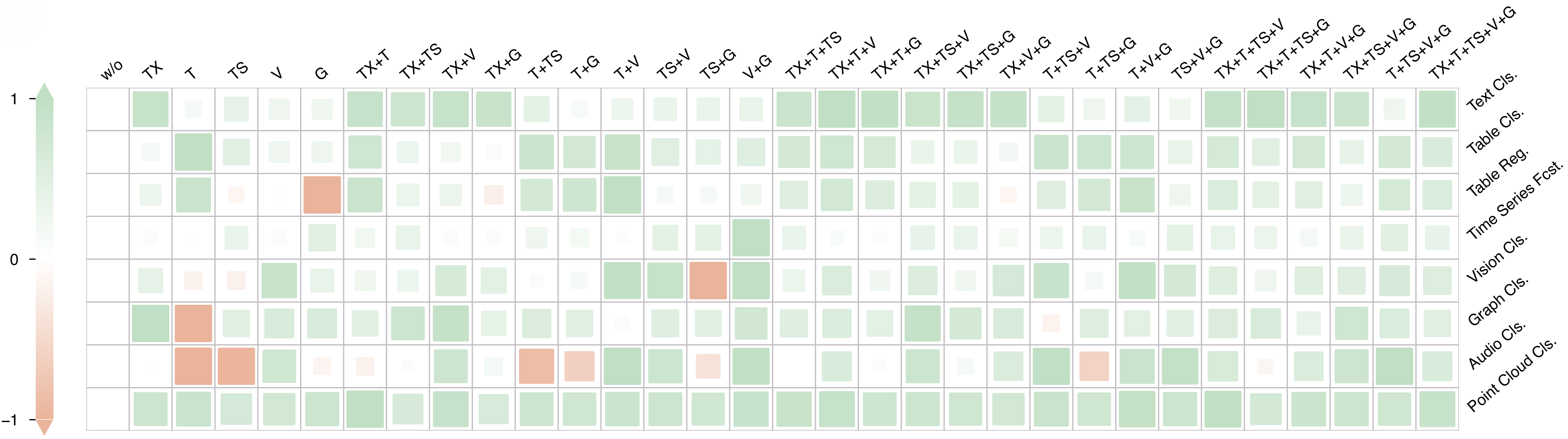

💞 Modality Affinity

Different modality combinations yield gains of varying magnitude.


📚 Citation

If you find Pangaea useful for your research, please cite our work:

@misc{chang2025aipangaeaunifyingintelligence,
  title={AI Pangaea: Unifying Intelligence Islands for Adapting Myriad Tasks},
  author={Jianlong Chang and Haixin Wang and Zhiyuan Dang and Li Huang and Zhiyu Wang and Ruoqi Cao and Shihao Piao and Dongzhe Li and Dianyu Gao and Dongsheng Wang and Yin Li and Jinan Sun and Lu Fang and Zhouchen Lin},
  year={2025},
  eprint={2509.17460},
  archivePrefix={arXiv},
  primaryClass={cs.AI},
  url={https://arxiv.org/abs/2509.17460},
}